In the video below, Dr. Thomas Reese introduces himself and describes what sparked his interest in application design.

Human Factors Engineering and Human-Centered Design

One may assume design refers only to how something looks, but it also applies to how something functions. Human Factors Engineering (HFE) focuses on interactions among humans and other elements in a system, applying theories, principles, data, and methods to design in order to optimize human well-being and overall system performance. Here we focus on using HFE to design technologies, though these principles and methods can be applied to the design of all aspects of a system. Human-centered design (HCD) is an HFE methodology that aims to make interactive systems more usable by engaging users and applying human factors principles throughout the design process. As Dr. Reese describes in the video below, HCD incorporates five basic principles: 1) design is based on an understanding of users, tasks, and environments; 2) users are involved throughout design and development; 3) design is driven and refined by user-centered evaluation; 4) the design process is iterative; and 5) the design involves a multidisciplinary team.

Understanding Environment of Use and Defining User Needs

The first two steps of human-centered design are understanding the environment of use and defining user needs, both of which rely primarily on qualitative research methods. In the video below, Dr. Salwei explains how understanding the environment of use often involves making observations and taking notes about the environment and any challenges a user may face while performing a technology-related task. Conducting interviews can be another effective means of gathering information, especially when open-ended questions are used. Qualitative data analysis can then be applied to the written transcripts to help identify problems and develop recommendations.

Iterative Design

Iterative design involves repeating a sequence of steps until a desired outcome is achieved. This includes revising and refining prototypes as new information is obtained to ensure that whatever is being developed really meets the user’s needs. The process begins with assembling a multidisciplinary team that includes real users and developing ground rules (how conflicting ideas will be handled, how to decide if something is out of scope). The team then holds multiple group meetings to brainstorm, sketch, and prototype designs while systematically applying human factors design principles throughout. In the video below, Dr. Salwei describes 14 high-level examples of human factors design principles. These include consistency in layout and position, color, sequence of actions, and terminology, as well as minimalism, which means removing any extraneous information to avoid distractions and improve efficiency. These principles can be applied through heuristic evaluations, which systematically identify any violations of the design principles.

Evaluations - Task Success and Efficiency

Formative evaluations focus on determining which aspects of the design work well and why. These occur throughout the design process and provide information to incrementally improve the interface. Summative evaluations describe how well a design performs and are often used to compare it to a prior version of the design or to a competitor. Unlike a formative evaluation, where the goal is to inform the design process, a summative evaluation assesses the big picture and overall experience of a finished product. Evaluating user experience is usually framed in terms of usability, which is defined as the extent to which a product can be used by specified users to achieve specified goals with effectiveness, efficiency, and satisfaction in a specified context of use. Effectiveness is a measure of whether or not a user was able to complete a given task, while efficiency describes how long it took the user to complete that task. To measure these dimensions accurately, the task must be defined and broken down into discrete activities, sometimes requiring software that allows a user to skip activities if needed. Efficiency can be measured by time on task or by the number of actions or steps taken to complete a task. In the video below, Dr. Reese walks through an example task and shows how its discrete parts could be scored for success and the results interpreted.
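As a rough illustration of scoring a task that has been broken into discrete activities, the Python sketch below computes a success rate (effectiveness) along with time on task and a step count (efficiency). The activity names, timings, and the `ActivityResult` structure are hypothetical and invented for this example; they are not taken from Dr. Reese's video, and a real study may score partial success or weight activities differently.

```python
from dataclasses import dataclass


@dataclass
class ActivityResult:
    """One discrete activity within a task (all values here are made up)."""
    name: str
    completed: bool   # did the user finish this activity?
    seconds: float    # time spent on this activity
    actions: int      # clicks/keystrokes taken, as counted by the observer


def success_rate(results):
    """Effectiveness: fraction of activities the user completed."""
    return sum(r.completed for r in results) / len(results)


def time_on_task(results):
    """Efficiency: total seconds spent across all activities."""
    return sum(r.seconds for r in results)


def total_actions(results):
    """Efficiency (alternative): total number of actions taken."""
    return sum(r.actions for r in results)


# Hypothetical session for a single participant.
session = [
    ActivityResult("open patient record", True, 12.0, 3),
    ActivityResult("review medication options", True, 30.5, 6),
    ActivityResult("compare side effects", False, 45.0, 9),
    ActivityResult("select medication", True, 8.5, 2),
]

print(f"Success rate: {success_rate(session):.0%}")
print(f"Time on task: {time_on_task(session):.1f} s")
print(f"Actions taken: {total_actions(session)}")
```

Recording each activity separately makes it possible to see where a participant failed or slowed down, not just whether the overall task succeeded.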

Evaluations - Self-Reported Metrics

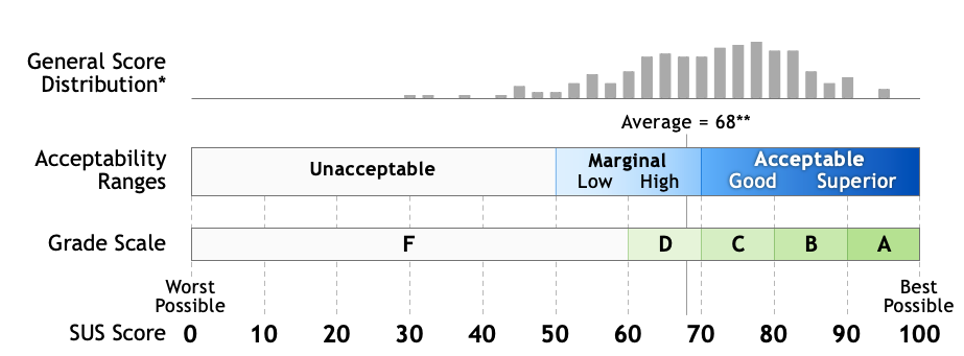

Self-reported metrics give you the most important information about a user’s perception of the system and are often collected as post-session ratings. In the video below, Dr. Reese describes two well-known and easy-to-use questionnaires, the System Usability Scale (SUS) and the Questionnaire for User Interface Satisfaction (QUIS). The SUS consists of 10 statements, a mix of positively and negatively worded items, each rated on a Likert scale; the final score can be interpreted on its own, without comparison to another tool. The QUIS consists of 27 rating scales divided into five categories, with ratings on a 10-point scale whose anchors change depending on the statement. The scoring of the QUIS is not freely available, though, and the questionnaire must be licensed to receive the standardized results. Dr. Reese also provides some guidance for determining the right metric to use in various circumstances.
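For readers who want to see how a SUS score is derived, here is a minimal Python sketch of the standard published scoring procedure. It assumes the usual form of the questionnaire: a 5-point scale (1 = strongly disagree, 5 = strongly agree) with odd-numbered items worded positively and even-numbered items worded negatively. The function name `sus_score` and the sample responses are illustrative only.

```python
def sus_score(responses):
    """Return a SUS score (0-100) from ten ratings on a 1-5 scale.

    Assumes standard SUS ordering: items 1, 3, 5, 7, 9 are positively
    worded and items 2, 4, 6, 8, 10 are negatively worded.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1   # positive item: higher rating is better
        else:
            total += 5 - rating   # negative item: lower rating is better
    return total * 2.5            # rescale the 0-40 sum to 0-100


# Hypothetical responses from one participant.
example = [4, 2, 4, 2, 4, 2, 4, 2, 4, 2]
print(sus_score(example))
```

The result is a score from 0 to 100, not a percentage. Scores from several participants are typically averaged before being interpreted.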

Usability Exercise – System Usability Scale

The following exercise will take about 5 minutes to complete. It will give you real-world experience using the System Usability Scale to evaluate an application for shared decision making.

- Consider the following scenario: A 27-year-old female presents with a chief complaint of depression characterized by hypersomnia (sleeps all the time), low energy (too difficult to go out with friends), and poor concentration (can’t finish a grocery list). She is currently unemployed and concerned about gaining weight.

- Diagnosis: major depression

- Past medical history: seizures

- Plan: start antidepressant

- Access the application (here)

- Work through the process of choosing a prescription medication either from the patient or provider perspective.

- Complete the System Usability Scale (here)